Object Tracker User Guide

Version 1.3 | Published April 10, 2024 ©

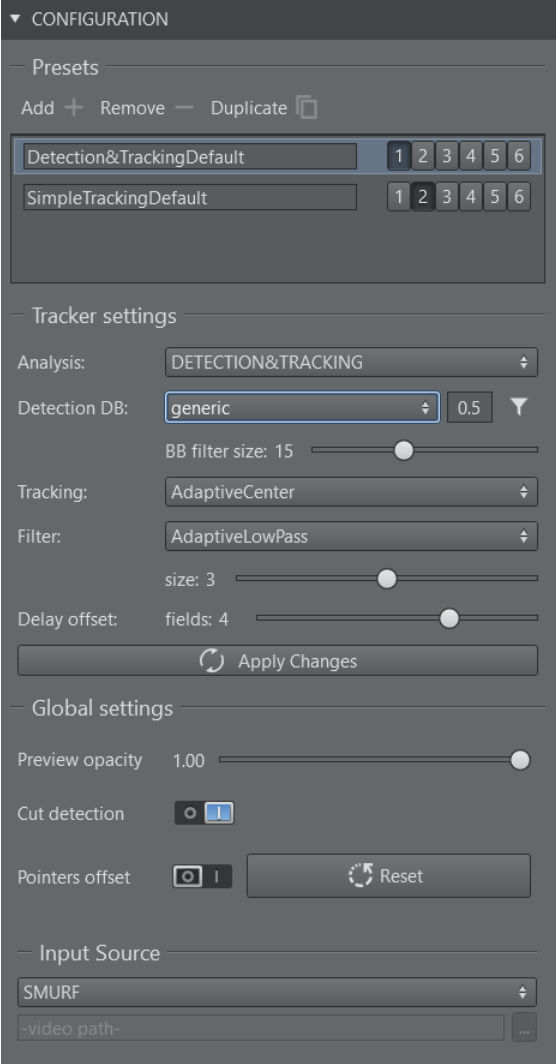

Configuration Panel

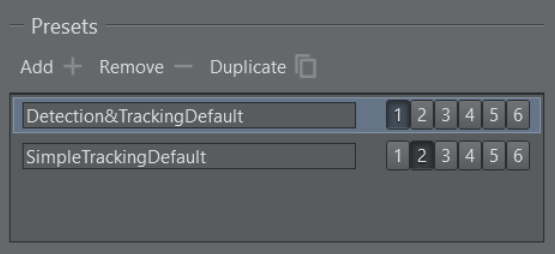

Presets

You can create multiple presets, each selecting which tracking algorithm to use and, for the AI tracker, which neural network to work with. Each algorithm has specific settings, which are exposed in the Tracker Settings. One or more tracking IDs can be assigned to a preset. All active presets (those with at least one tracking ID assigned) run simultaneously, and the Object Tracker switches algorithms seamlessly according to the current tracking ID.
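The relationship between presets, tracking IDs and active presets can be pictured as a simple lookup. The following is a minimal sketch under stated assumptions: the `Preset` class, its fields and the `preset_for` helper are hypothetical and only model the rules described above (a preset is active when it has at least one tracking ID, and the current tracking ID selects the preset whose algorithm is used).

```python
from dataclasses import dataclass, field
from typing import List, Optional, Set


@dataclass
class Preset:
    """Hypothetical model of a preset; field names are illustrative only."""
    name: str
    algorithm: str                 # e.g. "Detection and Tracking"
    network: Optional[str] = None  # neural network for the AI tracker, if any
    tracking_ids: Set[int] = field(default_factory=set)

    @property
    def active(self) -> bool:
        # A preset runs only when at least one tracking ID is assigned to it.
        return bool(self.tracking_ids)


def preset_for(tracking_id: int, presets: List[Preset]) -> Optional[Preset]:
    """Return the active preset that owns `tracking_id`, or None."""
    for preset in presets:
        if preset.active and tracking_id in preset.tracking_ids:
            return preset
    return None


presets = [
    Preset("People", "Detection and Tracking", "generic", {1, 2}),
    Preset("Hand", "Manual", tracking_ids={3}),
    Preset("Spare", "Simple Tracking"),  # no tracking ID, so not active
]
# Switching the current tracking ID selects the algorithm of its preset.
print([p.name for p in presets if p.active])  # ['People', 'Hand']
print(preset_for(3, presets).algorithm)       # Manual
```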

Tracker Settings

Analysis

Manual

This mode allows you to track manually using the mouse pointer, touch screen or pen input.

Detection and Tracking

This option selects the AI tracker based on a neural network.

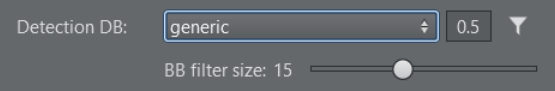

Detection DB

The network to be used can be selected under Detection Types.

The generic network is always available and recognizes a few standard object types (for example, people and cars).

The number beside the dropdown is a threshold in the range 0.0 to 1.0. It represents the confidence with which an object has been detected: the higher the value, the higher the probability that the detection is correct. Objects with a low confidence score can be ignored by using this threshold value. Depending on the quality of the images and the neural network, this value can be adjusted to improve the overall tracking.
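The effect of the threshold can be sketched as a filter over the network's detections. This is a minimal illustration, assuming each detection carries a label, a confidence score in the range 0.0 to 1.0 and a bounding box; the `Detection` type and `apply_threshold` function are hypothetical, and whether the Object Tracker keeps scores exactly equal to the threshold is also an assumption.

```python
from typing import NamedTuple, Tuple


class Detection(NamedTuple):
    label: str
    confidence: float                       # 0.0 .. 1.0, reported by the network
    box: Tuple[float, float, float, float]  # x, y, width, height


def apply_threshold(detections, threshold):
    """Drop detections whose confidence is below `threshold`."""
    if not 0.0 <= threshold <= 1.0:
        raise ValueError("threshold must be in the range 0.0 to 1.0")
    return [d for d in detections if d.confidence >= threshold]


detections = [
    Detection("person", 0.92, (10, 20, 50, 120)),
    Detection("car", 0.41, (200, 80, 140, 70)),
    Detection("person", 0.55, (300, 30, 45, 110)),
]
kept = apply_threshold(detections, 0.5)
print([(d.label, d.confidence) for d in kept])  # [('person', 0.92), ('person', 0.55)]
```

Raising the threshold trades missed detections for fewer false positives; lowering it does the opposite.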

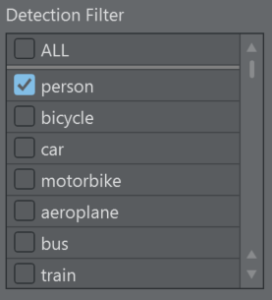

Pressing the Detection Filter button shows the list of available objects, that is, the objects the neural network can recognize.

Detection Filter

Select which objects you want to detect. In most cases, you want to select one or two kinds of objects in a video stream.
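Conceptually, the Detection Filter keeps only detections whose class is among the selected objects, and only classes the selected network knows can be chosen. The sketch below assumes detections are simple `(label, confidence)` pairs; the function name and the example class list are hypothetical.

```python
def apply_detection_filter(detections, selected, network_classes):
    """Keep detections whose class label is in `selected`.

    detections: list of (label, confidence) pairs (hypothetical shape).
    network_classes: labels the chosen network can recognize.
    """
    unknown = set(selected) - set(network_classes)
    if unknown:
        # Only classes the network actually knows can be selected.
        raise ValueError(f"not recognized by this network: {sorted(unknown)}")
    wanted = set(selected)
    return [d for d in detections if d[0] in wanted]


network_classes = ["person", "car", "bicycle", "dog"]  # illustrative list
detections = [("person", 0.9), ("car", 0.8), ("dog", 0.7)]
print(apply_detection_filter(detections, ["person", "car"], network_classes))
# [('person', 0.9), ('car', 0.8)]
```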

BB (Bounding Box) Filter Size

Bounding boxes are filtered separately from the actual tracking point, because they are noisier and subject to more extreme and sudden changes over time (for example, when a person is walking). You can configure a filter size for smoothing the bounding boxes in the tracking output. This is particularly useful when graphics need to be aligned to the bounding box. When using Simple Tracking, the BB Filter Size slider is disabled, since there are no bounding boxes.
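The guide does not state which filter is applied to bounding boxes. As an assumption, the sketch below smooths each box coordinate with a moving average over the last `size` frames, which shows how a larger filter size produces a steadier but slower-reacting box. The `BoxSmoother` class is hypothetical.

```python
from collections import deque


class BoxSmoother:
    """Moving-average smoothing of (x, y, w, h) boxes over the last `size` frames."""

    def __init__(self, size: int):
        if size < 1:
            raise ValueError("size must be >= 1")
        self.history = deque(maxlen=size)  # oldest boxes fall out automatically

    def update(self, box):
        self.history.append(box)
        n = len(self.history)
        # Average each of the four coordinates independently.
        return tuple(sum(b[i] for b in self.history) / n for i in range(4))


smoother = BoxSmoother(size=3)
for box in [(0, 0, 10, 10), (3, 0, 16, 10), (6, 0, 22, 10), (9, 0, 28, 10)]:
    smoothed = smoother.update(box)
print(smoothed)  # (6.0, 0.0, 22.0, 10.0)
```

With `size=1` the raw boxes pass through unchanged.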

Tracking

The Tracking Types dropdown lets you choose what kind of refined tracking to use inside the detected bounding box. The button next to the dropdown sends the new settings to the Object Tracker without having to use the global initialize button.

- AdaptiveCenter: Extracts color/luminance features around the center of the bounding box. A gravity force re-centers the tracking point toward the center of the bounding box over time to avoid drifting.
- AdaptiveTop: Extracts color/luminance features towards the top area of the bounding box. This is typically used when tracking people, as the arms and legs might move too much for stable tracking, while the chest and head are more stable and yield better tracking results. As with AdaptiveCenter, a gravity force re-centers the tracking point toward the center of the bounding box over time to avoid drifting.
- MagneticCenter: Works like AdaptiveCenter, but when the object is not detected, it uses the same algorithm as Simple Tracking (Adaptive) to keep following the object. The downside is that the tracking is never lost, so the operator has to turn it off manually.
- MagneticTop: Works like AdaptiveTop, but when the object is not detected, it uses the same algorithm as Simple Tracking (Adaptive) to keep following the object. The downside is that the tracking is never lost, so the operator has to turn it off manually.
- TrackerCenter: Extracts features around the center of the bounding box. A gravity force re-centers the tracking point toward the center of the bounding box over time to avoid drifting.
- TrackerTop: Extracts features towards the top area of the bounding box. A gravity force re-centers the tracking point toward the center of the bounding box over time to avoid drifting.
- Simple: Uses the center of the detected bounding box as the tracked reference.
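The behaviors listed above (Center versus Top feature regions, gravity re-centering, and the Magnetic fallback) can be sketched per frame as follows. This is an illustrative model under assumptions, not the Object Tracker's implementation: feature extraction is abstracted into a hypothetical `motion` offset, and `fraction` and `gravity` are hypothetical parameters. The guide only states that the Magnetic types keep following the object when it is not detected; treating the other types as lost in that case is an assumption.

```python
from typing import Optional, Tuple

Box = Tuple[float, float, float, float]  # x, y, width, height
Point = Tuple[float, float]


def feature_region(box: Box, mode: str, fraction: float = 0.4) -> Box:
    """Sub-rectangle of `box` in which features would be sampled.

    "Center": a region of relative size `fraction`, centered in the box.
    "Top": same size, but touching the top edge of the box.
    """
    x, y, w, h = box
    rw, rh = w * fraction, h * fraction
    rx = x + (w - rw) / 2
    if mode == "Center":
        ry = y + (h - rh) / 2
    elif mode == "Top":
        ry = y
    else:
        raise ValueError(f"unknown mode: {mode}")
    return (rx, ry, rw, rh)


def box_center(box: Box) -> Point:
    x, y, w, h = box
    return (x + w / 2, y + h / 2)


def next_point(point: Point, box: Optional[Box], motion: Point,
               tracker: str, gravity: float = 0.1) -> Optional[Point]:
    """One frame of refined tracking; returns None when tracking is lost.

    motion: (dx, dy) reported by a hypothetical feature tracker.
    gravity: fraction of the distance to the box center recovered per frame.
    """
    if tracker == "Simple":
        return None if box is None else box_center(box)
    moved = (point[0] + motion[0], point[1] + motion[1])
    if box is None:
        # Magnetic* keeps following features, so tracking is never lost;
        # the other types are assumed to drop the track here.
        return moved if tracker.startswith("Magnetic") else None
    cx, cy = box_center(box)
    # Gravity pulls the point toward the box center to avoid drifting.
    return (moved[0] + gravity * (cx - moved[0]),
            moved[1] + gravity * (cy - moved[1]))


print(feature_region((0, 0, 100, 200), "Top", 0.5))  # (25.0, 0, 50.0, 100.0)
print(next_point((0, 0), (0, 0, 100, 100), (0, 0), "AdaptiveCenter"))  # (5.0, 5.0)
print(next_point((10, 10), None, (2, 3), "MagneticTop"))  # (12, 13)
```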

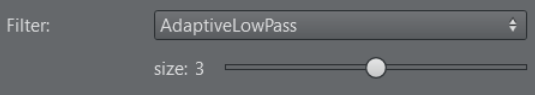

Filter

Filter Types

Filter types let you filter the tracked point over time. The button next to the dropdown sends the new settings to the Object Tracker without having to use the global initialize button. The following filters are available:

- AdaptiveLowPass: Minimizes jitter and lag using a low-pass filter.
- MovingAverage: Calculates the average of the most recent points.
- ExponentialMovingAverage: Similar to MovingAverage, but gives more weight to the most recent points.
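The difference between MovingAverage and ExponentialMovingAverage can be illustrated on a single coordinate of the tracked point. This is a sketch of the textbook forms of both filters, not necessarily the Object Tracker's exact implementation; mapping the filter size to the EMA weight with `alpha = 2 / (size + 1)` is a common convention and an assumption here. AdaptiveLowPass is not modeled.

```python
from collections import deque


class MovingAverage:
    """Plain average of the last `size` samples."""

    def __init__(self, size: int):
        self.window = deque(maxlen=size)

    def update(self, value: float) -> float:
        self.window.append(value)
        return sum(self.window) / len(self.window)


class ExponentialMovingAverage:
    """Recursive average that weights recent samples more heavily.

    alpha = 2 / (size + 1) is an assumed mapping from filter size to weight.
    """

    def __init__(self, size: int):
        self.alpha = 2.0 / (size + 1)
        self.state = None

    def update(self, value: float) -> float:
        if self.state is None:
            self.state = value  # seed with the first sample
        else:
            self.state = self.alpha * value + (1 - self.alpha) * self.state
        return self.state


# Filter the x coordinate of a tracked point; y would use a second instance.
ma, ema = MovingAverage(3), ExponentialMovingAverage(3)
for x in [0.0, 0.0, 0.0, 8.0]:
    smoothed = (ma.update(x), ema.update(x))
# After the jump to 8.0, the EMA has moved further toward it than the moving average.
print(smoothed)
```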

Size

Increasing the size of the filter helps to smooth noisy results.

Warning: Be very careful when using filters. In most cases, they introduce undesired lag, especially when the tracked object changes direction rapidly and suddenly. The larger the filter size, the larger the lag. However, filters can be very beneficial for smooth and slow object movements.
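The relationship between filter size and lag can be checked with a simple moving average: feed it a sudden jump (a step from 0.0 to 1.0) and count how many frames the output needs to reach the new value. This sketch uses a plain moving average as a stand-in for the filters above; the function name is hypothetical.

```python
from collections import deque


def frames_to_settle(size: int) -> int:
    """Feed a step from 0.0 to 1.0 into a moving average of `size` samples and
    return how many frames (counting the frame of the jump) the output needs
    to reach 1.0."""
    window = deque([0.0] * size, maxlen=size)  # filter already settled at 0.0
    frame = 0
    while True:
        frame += 1
        window.append(1.0)
        if sum(window) / size >= 1.0:
            return frame


for size in (1, 3, 5, 9):
    print(size, frames_to_settle(size))
```

Each size needs exactly that many frames to catch up, so the lag grows linearly with the filter size.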

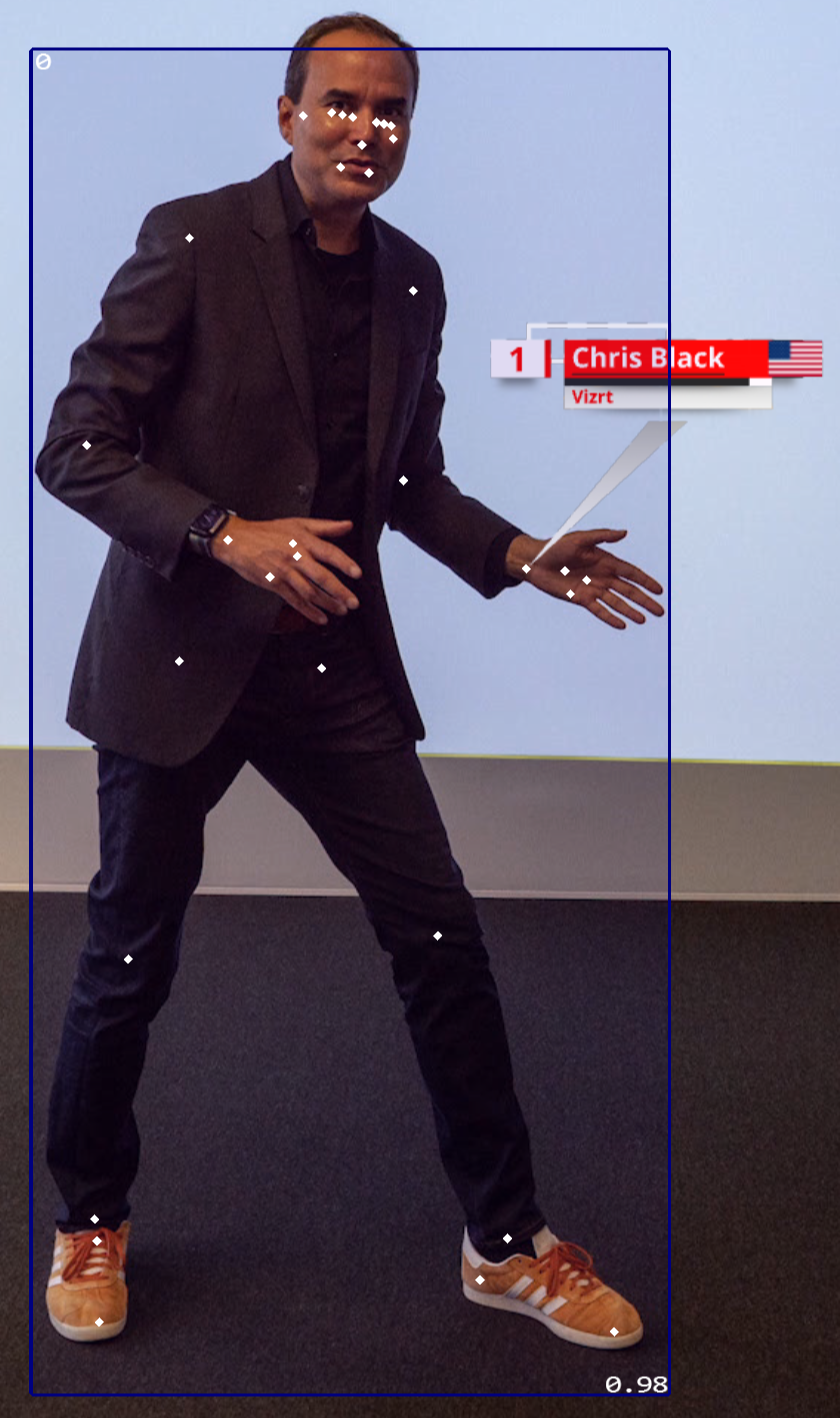

Detected object's bounding box

An example of a bounding box of a detected person object.

Simple Tracking

Tracking Types

This option tracks objects without the aid of a neural network. It uses the same algorithms that are used for refined tracking in Detection and Tracking.

- Adaptive: Extracts color/luminance features around the clicked point.
- KCF: Extracts generic features around the clicked item.
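To make the idea of tracking from features around a clicked point concrete, here is a generic block-matching sketch on a grayscale frame given as a list of rows of luminance values. It takes the patch around the clicked point in the previous frame and searches a small neighborhood of the current frame for the position with the smallest sum of absolute differences. This is a plain illustration of patch tracking, not the Adaptive algorithm itself and not KCF; `radius` and `search` are hypothetical parameters.

```python
def patch(frame, cx, cy, r):
    """Square neighborhood of radius r around (cx, cy)."""
    return [row[cx - r:cx + r + 1] for row in frame[cy - r:cy + r + 1]]


def sad(a, b):
    """Sum of absolute differences between two equally sized patches."""
    return sum(abs(p - q) for ra, rb in zip(a, b) for p, q in zip(ra, rb))


def track_point(prev, curr, point, radius=1, search=3):
    """Move `point` to where its neighborhood from `prev` best matches `curr`."""
    x, y = point
    template = patch(prev, x, y, radius)
    height, width = len(curr), len(curr[0])
    best_score, best_pos = None, point
    # Only test candidates whose full patch lies inside the frame.
    for ny in range(max(radius, y - search), min(height - radius, y + search + 1)):
        for nx in range(max(radius, x - search), min(width - radius, x + search + 1)):
            score = sad(template, patch(curr, nx, ny, radius))
            if best_score is None or score < best_score:
                best_score, best_pos = score, (nx, ny)
    return best_pos


def frame_with_blob(cx, cy, size=8):
    """Dark size x size frame with a bright 3x3 blob centered at (cx, cy)."""
    frame = [[0] * size for _ in range(size)]
    for dy in (-1, 0, 1):
        for dx in (-1, 0, 1):
            frame[cy + dy][cx + dx] = 9
    return frame


print(track_point(frame_with_blob(2, 2), frame_with_blob(4, 3), (2, 2)))  # (4, 3)
```

An adaptive tracker would typically also refresh the template from the new position each frame so that it follows gradual changes in appearance.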

Filters

Same as the Filter settings in Detection and Tracking.

Face Tracking

This mode allows you to track up to two faces (the ones with the best results).

The points sent to Viz Engine(s) are:

- Outline of the face
- EyeLeft
- EyeRight
- Mouth
- BetweenEyes
- EyeCornerLeft
- EyeCornerRight
- PupilLeft
- PupilRight
- NoseTip
- CheekLeft
- CheekRight
- MouthCornerLeft
- MouthCornerRight
- Chin
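The selection of up to two faces and the set of points sent per face can be sketched as follows. It assumes each detected face has a hypothetical quality `score` and a mapping from point name to coordinates; the names in `FACE_POINTS` mirror the list above, with "Outline" standing in for the face outline. How the Object Tracker actually ranks faces is not documented here.

```python
FACE_POINTS = (
    "Outline", "EyeLeft", "EyeRight", "Mouth", "BetweenEyes",
    "EyeCornerLeft", "EyeCornerRight", "PupilLeft", "PupilRight",
    "NoseTip", "CheekLeft", "CheekRight",
    "MouthCornerLeft", "MouthCornerRight", "Chin",
)


def faces_to_send(faces, max_faces=2):
    """Pick the best `max_faces` faces and keep only the listed points.

    faces: list of dicts with a hypothetical "score" and a "points" mapping
    from point name to coordinates.
    """
    best = sorted(faces, key=lambda f: f["score"], reverse=True)[:max_faces]
    return [
        {"score": f["score"], "points": {n: f["points"][n] for n in FACE_POINTS}}
        for f in best
    ]


def dummy_face(score):
    points = {name: (0.0, 0.0) for name in FACE_POINTS}
    points["Forehead"] = (0.0, -1.0)  # not in the list, so it is dropped
    return {"score": score, "points": points}


sent = faces_to_send([dummy_face(0.6), dummy_face(0.9), dummy_face(0.8)])
print([f["score"] for f in sent])  # [0.9, 0.8]
```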

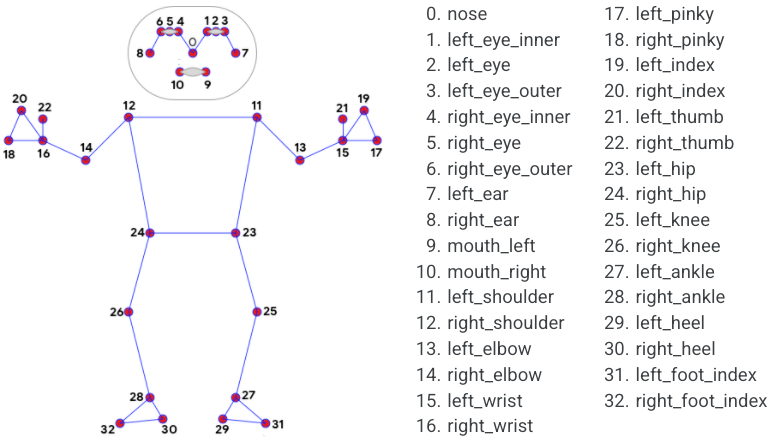

2D Pose Tracking

This mode allows you to track the pose of one person.

This is the map of the points sent to Viz Engine(s):

Delay Offset


The Object Tracker delays the results to match the video images with the tracking information. This slider increases (up to +10) or decreases (down to -10) the applied delay, measured in fields. This can be useful to compensate for the lag caused by aggressive filtering.
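The delay mechanism can be pictured as a queue that releases each tracking result a fixed number of fields after it was produced, with the slider adding a clamped offset. In this sketch, `base_delay` (the delay the Object Tracker applies on its own) is a hypothetical value, and clamping the total delay at zero is an assumption.

```python
from collections import deque

MIN_OFFSET, MAX_OFFSET = -10, 10  # slider range, in fields


def effective_delay(base_delay: int, offset: int) -> int:
    """Total delay in fields: the tracker's own delay plus the clamped slider offset.

    base_delay is a hypothetical value; clamping the total at zero is an assumption.
    """
    offset = max(MIN_OFFSET, min(MAX_OFFSET, offset))
    return max(0, base_delay + offset)


class DelayLine:
    """Holds tracking results back by a fixed number of fields."""

    def __init__(self, delay: int):
        self.delay = delay
        self.buffer = deque()

    def push(self, result):
        """Add this field's result; return the one from `delay` fields ago, if any."""
        self.buffer.append(result)
        if len(self.buffer) > self.delay:
            return self.buffer.popleft()
        return None


line = DelayLine(effective_delay(base_delay=4, offset=-2))
print([line.push(f"field {i}") for i in range(4)])
# [None, None, 'field 0', 'field 1']
```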

Apply Changes: Settings changed in the tracker panel are not applied to the Object Tracker immediately. A yellow triangle indicates that the parameters shown in the panel do not match the values used by the running algorithms. Some parameters can be updated by pressing Apply Changes, while others require a re-initialization. A tooltip tells you which action is needed.


Global Settings

Preview Opacity

The preview output that has been configured in Viz Arc can be rendered on top of the NDI stream coming from the Object Tracker. This allows the operator to preview the graphics on screen. The opacity of this preview can be adjusted here.

The preview is intended to verify the editorial graphics content and not the accuracy of the tracking.

Information: For performance reasons, the composed on-screen preview might not look the same as on the actual program output. Make sure the fill channel of the preview is black where it is supposed to be transparent. The timing between the underlying NDI stream and preview is not guaranteed to be correct. The tracking and the preview graphics might not match.
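The compositing formula used for the preview is not documented here. As an assumption, the sketch below treats the preview fill as premultiplied by its alpha and scales both by the Preview Opacity setting, blending one pixel of the preview over one pixel of the NDI stream. Under that assumption, it also shows why the note above asks for a black fill where the preview should be transparent: a non-black fill with zero alpha is still added to the NDI image. The `composite` function is hypothetical.

```python
def composite(bg, fill, alpha, opacity):
    """Blend one preview pixel over one NDI pixel.

    bg, fill: (r, g, b) in 0..255; fill is assumed premultiplied by alpha.
    alpha: preview key in 0..1; opacity: Preview Opacity setting in 0..1.
    """
    a = alpha * opacity
    return tuple(min(255, round(f * opacity + b * (1 - a))) for f, b in zip(fill, bg))


ndi = (0, 100, 200)
print(composite(ndi, (200, 100, 0), alpha=1.0, opacity=0.5))  # (100, 100, 100)
# A fill that is not black where alpha is 0 still adds to the image:
print(composite(ndi, (50, 50, 50), alpha=0.0, opacity=1.0))   # (50, 150, 250)
```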

Cut Detection

If enabled, all current trackers are lost when a hard cut is detected in the video.
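The guide does not describe how hard cuts are detected. One common technique is to compare the luminance histograms of consecutive frames and treat a large difference as a cut; the sketch below uses that technique as an illustration, together with the documented effect of dropping all trackers. The histogram approach, the `threshold` value and the function names are assumptions.

```python
def luminance_histogram(frame, bins=16):
    """Normalized histogram of a frame given as a flat list of 8-bit luminance values."""
    counts = [0] * bins
    for value in frame:
        counts[value * bins // 256] += 1
    return [c / len(frame) for c in counts]


def is_hard_cut(prev, curr, threshold=0.5):
    """Half the L1 distance between histograms: 0 = identical, 1 = disjoint."""
    h1, h2 = luminance_histogram(prev), luminance_histogram(curr)
    return 0.5 * sum(abs(a - b) for a, b in zip(h1, h2)) > threshold


def update_trackers(trackers, prev, curr, cut_detection=True):
    """Drop every active tracker when Cut Detection is on and a hard cut occurs."""
    if cut_detection and is_hard_cut(prev, curr):
        return {}
    return trackers


trackers = {1: (320, 180), 2: (640, 360)}  # tracking ID -> point (illustrative)
dark, bright = [10] * 100, [240] * 100
print(update_trackers(trackers, dark, bright))  # {}
print(update_trackers(trackers, dark, bright, cut_detection=False) == trackers)  # True
```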

Pointers Offset

Resets the pointer offset of each tracking input.

Input Source

Select SMURF to use the input of the Engine running on the machine where the Object Tracker has been installed. When Viz Arc is installed locally, you can also select a file. This workflow is intended for testing only, as it is not guaranteed to run in real time.